Automatic Parallelization and Power-Reduction Compiler Technology for Real-Time Control Computation on Multicores with Accelerators


July 24, 23

Slide overview

July 13, 2023: "AI Computing in Autonomous Driving"

Speaker: Prof. Hironori Kasahara (Professor, Waseda University; IEEE Computer Society President 2018)


Text of each page

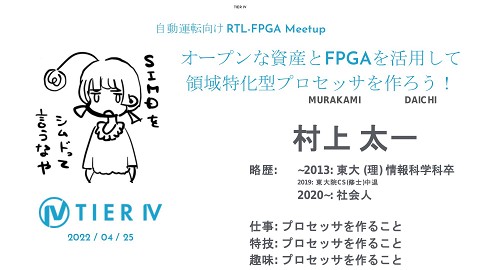

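Automatic Parallelization and Power-Reduction Compiler Technology for Real-Time Control Computation on Multicores with Accelerators
Hironori Kasahara, Professor, Department of Computer Science and Engineering, Faculty of Science and Engineering, Waseda University; Director, Advanced Multicore Processor Research Institute, Green Computing Systems Research Organization; IEEE Computer Society President 2018; Senior Executive Vice President, Waseda University (2018-2022)
Career:
1976 Graduated from Waseda University Senior High School
1980 B.S., Department of Electrical Engineering, Waseda University; 1982 M.S., Waseda University
1985 Ph.D. (Doctor of Engineering), Waseda University; JSPS postdoctoral fellow (first cohort); visiting researcher, University of California, Berkeley
1986 Assistant Professor, School of Science and Engineering, Waseda University; 1988 Associate Professor
1989-1990 Visiting researcher, Center for Supercomputing R&D, University of Illinois
1997 Professor; currently Department of Computer Science and Engineering, Faculty of Science and Engineering
2004 Director, Advanced Multicore Research Institute
2017 Member, Engineering Academy of Japan; Associate Member, Science Council of Japan
2018 IEEE Computer Society President; Senior Executive Vice President, Waseda University (until September 2022)
2019-2023 Director, Council on Competitiveness-Nippon (COCN)
2020- Director, Engineering Academy of Japan
Awards:
1987 IFAC World Congress Young Author Prize
1997 IPSJ Sakai Special Research Award
2005 STARC Academia-Industry Research Award
2008 LSI of the Year 2008 Second Prize; Intel Asia Academic Forum Best Research Award
2010 IEEE CS Golden Core Member Award
2014 Commendation for Science and Technology by the Minister of Education, Culture, Sports, Science and Technology (Research Category)
2015 IPSJ Fellow
2017 IEEE Fellow, IEEE Eta-Kappa-Nu
2019 IEEE CS Spirit of Computer Society Award
2020 IPSJ Contribution Award; SCAT President's Grand Prize
2023 IEEE Life Fellow
232 refereed papers, 234 invited talks, 67 international patents granted (US, UK, China, Japan, etc.), 698 media appearances (newspapers, Web articles, TV, etc.)
Government and academic society committees: 295 positions held
IEEE Computer Society President 2018, Executive Committee Chair, Board of Governors (2009-14), Strategic Planning Committee Chair, Nomination Committee Chair, Multicore STC Chair, IEEE CS Japan Chair, IEEE technical committee member, IEEE Medal selection committee member, ACM/IEEE SC'21 keynote selection committee member, etc.
METI/NEDO: Project leader of the Multicore for Consumer Electronics, Advanced Parallelizing Compiler and Green Computing projects; Chair, NEDO Computer Strategy Committee, etc.
Cabinet Office: Supercomputer strategy committee member; government procurement complaint review committee member; Council for Science and Technology Policy ICT PT, R&D infrastructure area and security/software review committee member; Japan Prize selection committee member
MEXT/JAMSTEC: Earth Simulator (ES) interim evaluation committee member; information science and technology committee member; HPCI plan promotion committee member; next-generation supercomputer (K) interim evaluation and conceptual design evaluation committee member; Chair, Earth Simulator ES2 introduction technical advisory committee, etc.
JST: Moonshot Goal 3 Robot & AI Vice Chair; SBIR Phase 1 committee chair, etc.
TIER IV Workshop 2023, AI Computing in Autonomous Driving, Thursday, July 13, 2023, 18:15-18:55 1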

Some of the papers written during and just after the Ph.D. course at Waseda University. Courtesy of dexchao - Fotolia.com 2

The First Compiler Codesigned Multiprocessor OSCAR (Optimally Scheduled Advanced Multiprocessor) in 1987
AMD29325 32-bit floating-point unit
H. Kasahara, "OSCAR Fortran Multigrain Compiler", Stanford University, hosted by Professor John L. Hennessy and Professor Monica Lam, May 15, 1995. 3

Cedar Supercomputer, University of Illinois at Urbana-Champaign, CSRD (Center for Supercomputing R&D)
Prof. David Kuck: Prof. Emeritus, Univ. of Illinois at Urbana-Champaign; founder of Kuck & Associates; ILLIAC IV (member)
Parallelizing compiler: data dependence analysis, automatic vectorization, automatic loop parallelization, loop restructuring
Cedar supercomputer (Alliant FX8); OpenMP
Intel Senior Fellow (past CTO): Intel Parallel Tuning Tools & Debuggers
National Academy of Engineering Member
IEEE/ACM Eckert-Mauchly Award, IEEE Charles Babbage Award, ACM/IEEE Ken Kennedy Award, Okawa Prize, IEEE Computer Pioneer Award 4

1993: VPP500 supercomputer and Numerical Wind Tunnel (NWT), Mr. Hajime Miyoshi. Presented at ACM/IEEE SC '94, Washington, D.C., November 1994. Commercial VPP5000 (Météo-France and others) 5

Earth Simulator
2021 Nobel Prize in Physics: Dr. Syukuro Manabe (Princeton University), atmosphere-ocean general circulation model (http://www.es.jamstec.go.jp/)
• Earth environmental simulation, such as global warming, El Niño and plate movement, for all life on this planet.
• Developed in Mar. 2002 by STA (MEXT) and NEC with a 400 M$ investment under Dr. Miyoshi's direction.
Mr. Hajime Miyoshi (Dr. Miyoshi passed away in Nov. 2001; NWT, VPP500, SX6)
40 TFLOPS peak ($40 \times 10^{12}$), 4 tennis courts, 35.6 TFLOPS Linpack
June 2002 TOP500 No. 1: Cores: 5,120, Rmax: 35.86 TFlop/s, Rpeak: 40.96 TFlop/s, Power: 3.2 MW 6

RIKEN, Kobe Port Island: 10 PFLOPS
Architecture of K: "K" Supercomputer by Riken, No.1 in TOP500, June 20 & Nov.2,2011
Tofu network, SPARC64™ VIIIfx (courtesy of Fujitsu Ltd.) 7

OSCAR Parallelizing Compiler
To improve effective performance, cost-performance and software productivity, and to reduce power.
Multigrain Parallelization (LCPC1991, 2001, 04): coarse-grain parallelism among loops and subroutines (2000 on SMP) and near-fine-grain parallelism among statements (1992), in addition to loop parallelism.
Data Localization (Local Memory 1995, 2016 on RP2; Cache 2001, 03): automatic data management for distributed shared memory, cache and local memory.
Software Coherent Control (2017)
Data Transfer Overlapping (2016 partially): data transfer overlapping using Data Transfer Controllers (DMAs).
Power Reduction (2005 for Multicore, 2011 Multi-processes, 2013 on ARM): reduction of consumed power by compiler-controlled DVFS and power gating with hardware support.
[Figure: static schedule over time of macro-tasks MT1-1 to MT3-8 (macro-task graphs MTG1 to MTG3) on CPU0 to CPU3 and DRP0, each with a CORE row and a DTU row; DTU rows show LOAD, SEND and STORE transfers; idle cores are marked OFF.] 8

Performance of APC Compiler on IBM pSeries690 16 Processors High-end Server
• IBM XL Fortran for AIX Version 8.1
– Sequential execution: -O5 -qarch=pwr4
– Automatic loop parallelization: -O5 -qsmp=auto -qarch=pwr4
– OSCAR compiler: -O5 -qsmp=noauto -qarch=pwr4 (su2cor: -O4 -qstrict)
3.5 times speedup on average
[Figure: bar chart of speedup ratio (0.0 to 12.0) for XL Fortran (max) and APC (max) on tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fpppp, wave5, wupwise, swim2k, mgrid2k, applu2k, sixtrack and apsi2k, with execution times in seconds labelled on the bars.] 9

Performance of Multigrain Parallel Processing for 102.swim on IBM pSeries690
[Figure: speedup ratio (0.00 to 16.00) versus number of processors (1 to 16) for XLF(AUTO) and OSCAR.] 10

NEC/ARM MPCore Embedded 4 core SMP
3.48 times speedup by OSCAR compiler against sequential processing
[Figure: speedup ratio (0 to 4.5) on 1 to 4 cores for g77 and OSCAR on SPEC95 tomcatv, swim, su2cor, hydro2d, mgrid, applu and turb3d.] 11

Performance of OSCAR compiler on 16 cores SGI Altix 450 Montvale server
Compiler options for the Intel Compiler: for automatic parallelization: -fast -parallel; for OpenMP codes generated by OSCAR: -fast -openmp
• OSCAR compiler gave us 2.32 times speedup against Intel Fortran Itanium Compiler revision 10.1
[Figure: speedup ratio (0 to 6) for Intel Ver.10.1 and OSCAR on SPEC95 (tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fpppp, wave5) and SPEC2000 (swim, mgrid, applu, apsi).] 12

Distributed Shared Memory Architecture (ccNUMA)
• Each processor has local memory.
• All memory is logically shared.
• Local memory access is faster than remote memory access.
• Programming is easy, as on shared memory architectures.
• Scalable, like distributed memory architectures.
D. Lenoski, J. Laudon, K. Gharachorloo, A. Gupta, J. Hennessy, “The directory-based cache coherence protocol for the DASH multiprocessor,” ACM SIGARCH Computer Architecture News 18 (2SI), 148-159, 1990
H. Kasahara, "OSCAR Fortran Multigrain Compiler", Stanford University, hosted by Professor John L. Hennessy and Professor Monica Lam, May 15, 1995. 13

What Are the Most Cited ISCA Papers? by David Patterson on Jun 15, 2023 https://www.sigarch.org/what-are-the-most-cited-isca-papers/ 14

15

110 Times Speedup against Sequential Processing for GMS Earthquake Wave Propagation Simulation on Hitachi SR16000 (Power7 Based 128 Core Linux SMP) (LCPC2015)
Fortran: 15 thousand lines
First touch for distributed shared memory and cache optimization over loops are important for scalable speedup. 16

RIKEN/Fujitsu Fugaku (富岳): Tofu Interconnect, 6D Mesh/Torus
https://www.fujitsu.com/jp/about/businesspolicy/tech/fugaku/
RIKEN Fugaku supercomputer: world No. 1 from June 2020 to November 2021
RIKEN Center for Computational Science, Fujitsu (Arm-based processor)
Cores: 7,299,072; Memory: 4,866,048 GB; Processor: A64FX 48 cores, 2.2 GHz; Interconnect: Tofu interconnect D
Linpack (Rmax): 415,530 TFlop/s; Theoretical Peak (Rpeak): 513 PFLOPS; HPCG: 13,366.4 TFlop/s; Power: 28.3 MW
48 cores/chip, 2.2 GHz, 7 nm FinFET, about 7.3 million cores, 28 MW
Theoretical peak performance: 510 quadrillion (51 京) floating-point operations per second, as of June 2020
https://www.r-ccs.riken.jp/en/fugaku/about/
https://japanese.engadget.com/arm-super-computer-fugaku-top-500-034015910.html 17

Fugaku A64FX: TSMC 7 nm CMOS process
SVE (Scalable Vector Extension): 2 floating-point units (FLA/FLB), 512-bit SIMD, multiply & add operations per cycle; 1,536 double-precision floating-point operations per cycle on a chip
12 cores + 1 assistant core; L2: 8 MiB, 16-way, line: 256 B
https://www.computer.org/csdl/magazine/mi/2022/02/09658212/1zw1njCTTeU
Memory architecture of A64FX CPU: tag-directory-based CC-NUMA
Pipeline in A64FX
https://www.fujitsu.com/jp/about/resources/publications/technicalreview/2020-03/article03.html 18

https://www.top500.org/ June 2023
2021 ACM A.M. Turing Award: https://amturing.acm.org/award_winners/dongarra_3406337.cfm
No. 1, June 2022-23: Frontier - HPE Cray EX235a, 8,699,904 total cores (8.7 million processors), AMD Optimized 3rd Generation EPYC 64C 2GHz, AMD Instinct MI250X accelerators, HPE Slingshot-11 interconnect, 22.7 MW
Oak Ridge National Laboratory (ORNL), USA
Rmax: 1.19 ExaFlop/s; Rpeak: 1.68 ExaFlop/s (168 京, i.e. $1.68 \times 10^{18}$ operations per second); HPCG: 14,054 TFlop/s 19

End of Moore's Law
Moore's law: an empirical rule proposed in a 1965 paper by Gordon E. Moore (IEEE Computer Pioneer Award), one of the founders of Intel: "Transistors on a chip 2× every 1.5 year" (semiconductor integration density doubles every 18 months).
Multicores are essential for both higher performance and lower power consumption in computers.
$\text{Power} \propto \text{Frequency} \times \text{Voltage}^2$; with $\text{Voltage} \propto \text{Frequency}$, $\text{Power} \propto \text{Frequency}^3$.
Reducing the frequency to 1/4 (e.g. 4 GHz → 1 GHz) gives 1/4 the performance and 1/64 the power.
Multicore: integrating 8 such cores on a chip gives 2 times the performance at 1/8 the power.
[Figure: floorplan of the 8-core chip: Core#0 to Core#7, ILRAM, I$, URAM, DLRAM, D$, SNC0, SNC1, LBSC, DBSC, CSM, SHWY, VSWC, GCPG, DDRPAD.]
IEEE ISSCC08: Paper No. 4.5, M. Ito, … and H. Kasahara, “An 8640 MIPS SoC with Independent Power-off Control of 8 CPUs and 8 RAMs by an Automatic Parallelizing Compiler” 20
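The arithmetic on this slide can be checked with a short sketch. It assumes only the slide's simplified model (power proportional to frequency times voltage squared, voltage proportional to frequency, and performance scaling ideally with frequency and core count); the function names are illustrative and not from the talk.

```python
from fractions import Fraction


def relative_power(freq_scale, cores=1):
    """Relative power under the slide's model: P ∝ f * V^2 and V ∝ f, so P ∝ f^3 per core."""
    return cores * Fraction(freq_scale) ** 3


def relative_performance(freq_scale, cores=1):
    """Relative performance, assuming ideal scaling with frequency and core count."""
    return cores * Fraction(freq_scale)


quarter = Fraction(1, 4)  # e.g. 4 GHz -> 1 GHz

# One core at 1/4 frequency: performance 1/4, power 1/64.
print(relative_performance(quarter), relative_power(quarter))

# Eight cores at 1/4 frequency: performance 2, power 1/8.
print(relative_performance(quarter, cores=8), relative_power(quarter, cores=8))
```

Real chips deviate from this model (leakage power, voltage floors, imperfect parallel scaling), so the figures are upper bounds on the benefit rather than predictions.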

8 Core RP2 Chip Block Diagram
[Figure: two clusters (Cluster #0 with Core #0 to #3, Cluster #1 with Core #4 to #7). Each core has a CPU, FPU, 16K I$, 16K D$, local memory (I: 8K, D: 32K), 64K User RAM (URAM), CCN/BAR and a power control register (PCR0 to PCR7). Each cluster has a snoop controller (0, 1) and a local clock pulse generator (LCPG0, LCPG1). Barrier synchronization lines connect the cores. The on-chip system bus (SuperHyway) connects DDR2 control, SRAM control and DMA control to off-chip shared memory and on-chip shared memory.]
LCPG: Local clock pulse generator
PCR: Power Control Register
CCN/BAR: Cache controller/Barrier Register
URAM: User RAM (Distributed Shared Memory) 21

Power Reduction of MPEG2 Decoding to 1/4 on 8 Core Homogeneous Multicore RP-2 by OSCAR Parallelizing Compiler
MPEG2 decoding with 8 CPU cores
Without power control (voltage: 1.4 V): average power 5.73 W
With power control (frequency control, resume standby: power shutdown and voltage lowering from 1.4 V to 1.0 V): average power 1.52 W
73.5% power reduction
[Figure: power waveforms (0 to 7 W) for the two cases.] 22

23

Demo of NEDO Green Multicore Processor for Real Time Consumer Electronics at the Council for Science and Technology Policy on April 10, 2008
http://www8.cao.go.jp/cstp/gaiyo/honkaigi/74index.html
Codesign of Compiler and Multiprocessor Architecture since 1985
Prime Minister Fukuda touching our multicore chip during execution. 24

IEEE Computer Society: the first President from outside North America in the 72-year history of IEEE CS. IEEE 754, 802 25

Seymour Cray: Father of Supercomputers using vector pipeline 2019 Seymour Cray Award Winner: David Kirk, NVIDIA Corporation (retired) https://www.youtube.com/watch?v=Yc-VFuRWevw 26

https://www.computer.org/product/education/multi-core-video-lectures-bundle Created by Multicore STC Chair Hironori Kasahara 27

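Information Devices Powered by Solar Power
Computer power consumption is reduced through hardware and software cooperation, so devices can keep operating even when the power supply is lost.
Real-time MPEG2 decoding on the 8-core homogeneous multicore RP2 with power consumption reduced to 1/4: the world's only differentiating technology.
Without power control: average power 5.73 W
With power control (frequency/voltage and power shutdown control): average power 1.52 W
Power is reduced to 1/4 by software, so the device can be driven by solar cells.
[Figure: power (W, 0 to 7) for the two cases, with the saved power marked.] Waseda University 28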

Power Reduction by Power Supply, Clock Frequency and Voltage Control by OSCAR Compiler • Shortest execution time mode 29

Power Reduction Scheduling 30

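Low-Power Optimization with OSCAR API
Scheduled result by OSCAR compiler: macro-tasks MT1 to MT4 assigned to two cores, VC0 and VC1, with a Sleep interval where a core is idle.
Generated code image by OSCAR compiler: void main_VC0() and void main_VC1() each run the macro-tasks assigned to that core, with power-control directives placed around the Sleep interval:
#pragma oscar fvcontrol \ ((OSCAR_CPU(),0))
#pragma oscar fvcontrol \ (1,(OSCAR_CPU(),100)) 31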
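The idea of turning idle gaps in a static schedule into power-control points can be sketched as follows. This is a minimal illustration, not the OSCAR compiler's algorithm: the task start and end times, the `break_even` threshold and the function names are all hypothetical, and a gap is simply marked "off" when it is long enough to plausibly repay the cost of powering down and resuming.

```python
def idle_gaps(schedule, makespan):
    """Return (start, end) idle intervals for one core.

    schedule: list of (task_name, start, end) tuples sorted by start time.
    """
    gaps, cursor = [], 0
    for _name, start, end in schedule:
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < makespan:
        gaps.append((cursor, makespan))
    return gaps


def plan_power_control(schedules, break_even):
    """Mark each idle gap 'off' if it lasts at least break_even time units, else 'idle'."""
    makespan = max(end for sched in schedules.values() for _n, _s, end in sched)
    plan = {}
    for core, sched in schedules.items():
        plan[core] = [
            (a, b, "off" if b - a >= break_even else "idle")
            for a, b in idle_gaps(sched, makespan)
        ]
    return plan


# Hypothetical static schedule for two cores (times in arbitrary units).
schedules = {
    "VC0": [("MT1", 0, 4), ("MT4", 10, 14)],
    "VC1": [("MT2", 0, 6), ("MT3", 6, 13)],
}
plan = plan_power_control(schedules, break_even=3)
print(plan)
```

In generated code, each "off" interval would correspond to a pair of power-control directives like the `fvcontrol` pragmas on the slide: one before the gap to lower or cut power, one after it to restore full speed.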

Multicore Program Development Using OSCAR API V2.0
Input: sequential application program in Fortran or C (consumer electronics, automobiles, medical, scientific computation, etc.)
Manual parallelization / power reduction
Accelerator compiler/user: add "hint" directives before a loop or a function to specify that it is executable by the accelerator and in how many clocks.
Waseda OSCAR Parallelizing Compiler: coarse grain task parallelization; data localization; DMAC data transfer; power reduction using DVFS and clock/power gating.
OSCAR API for homogeneous and/or heterogeneous multicores and manycores: directives for thread generation, memory, data transfer using DMA, and power management (Hitachi, Renesas, NEC, Fujitsu, Toshiba, Denso, Olympus, Mitsubishi, Esol, Cats, Gaio, 3 univ.)
Parallelized API F or C program: Proc0 code with directives (Thread 0), Proc1 code with directives (Thread 1), Accelerator 1 code, Accelerator 2 code
Generation of parallel machine codes using sequential compilers:
• Low-power homogeneous multicore code generation: API analyzer and existing sequential compiler, for homogeneous multicores from Vendor A (SMP servers)
• Low-power heterogeneous multicore code generation: API analyzer (available from Waseda) and existing sequential compiler, for heterogeneous multicores from Vendor B
• Server code generation: OpenMP compiler, for shared memory servers
Executable on various multicores
OSCAR: Optimally Scheduled Advanced Multiprocessor; API: Application Program Interface 32

Earliest Executable Condition Analysis for Coarse Grain Tasks (Macro-tasks)
[Figure panels: a macro flow graph; a macro task graph.] 33
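A rough way to picture an earliest executable condition is as a readiness test evaluated at run time. The sketch below assumes the condition has the form "a branch enabling this macro-task has been taken, and every macro-task it is data dependent on has either finished or is known not to execute"; that form, the `MacroTask` class and all field names are assumptions made for illustration, not the compiler's actual representation.

```python
from dataclasses import dataclass, field


@dataclass
class MacroTask:
    name: str
    # Branch outcomes (branch_task, target) that enable this task; empty means unconditional.
    control: set = field(default_factory=set)
    # Names of macro-tasks this task is data dependent on.
    data_preds: set = field(default_factory=set)


def is_executable(task, finished, branches, skipped):
    """Assumed readiness test: control condition met and all data predecessors resolved.

    finished: names of macro-tasks that completed execution
    branches: set of (branch_task, target) outcomes decided so far
    skipped:  names of macro-tasks known not to execute
    """
    control_ok = not task.control or bool(task.control & branches)
    data_ok = all(p in finished or p in skipped for p in task.data_preds)
    return control_ok and data_ok


# Hypothetical example: MT1 branches to MT2 or MT3; MT4 needs data from MT2 only.
mt2 = MacroTask("MT2", control={("MT1", "MT2")})
mt4 = MacroTask("MT4", data_preds={"MT2"})

# If MT1 branches to MT3, MT2 will never run, so MT4 can start immediately.
print(is_executable(mt4, finished=set(), branches={("MT1", "MT3")}, skipped={"MT2"}))

# If MT1 branches to MT2, MT4 must wait until MT2 finishes.
print(is_executable(mt4, finished=set(), branches={("MT1", "MT2")}, skipped=set()))
```

The point of the first call is that MT4 becomes executable as soon as the branch is resolved, earlier than a schedule that simply follows control flow order would allow.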

Generation of Coarse Grain Tasks
Macro-tasks (MTs):
• Block of Pseudo Assignments (BPA): basic block (BB)
• Repetition Block (RB): natural loop
• Subroutine Block (SB): subroutine
[Figure: hierarchical decomposition of a program (total system) into BPA, RB and SB macro-tasks over the 1st, 2nd and 3rd layers. A BPA is parallelized at near fine grain; an RB by loop level parallelization, near fine grain parallelization of the loop body, or coarse grain parallelization; an SB by coarse grain parallelization.] 34

MTG of Su2cor-LOOPS-DO400
Coarse grain parallelism PARA_ALD = 4.3
Legend: DOALL, Sequential LOOP, SB, BB 35

36

Data Localization
[Figure panels: MTG; MTG after division; a schedule for two processors.] 37

Intel Xeon E5-2650v4 – Benchmark results on up to 8 cores
x86-64 based architecture, 12 cores, 2.2 GHz – 2.9 GHz; 30 MiB L3 cache shared by all cores; gcc as backend
[Figure: speedup to sequential version (0 to 8) on 1, 2, 4 and 8 cores for BT, CG, SP, art, equake, MPEG2 and swim.]
swim shows superlinear speedup and 1-core speedup. seq.: 58.1 s, 1 core OSCAR: 33.2 s, 4 core OSCAR: 10.5 s. 2021/10/14 38

AMD EPYC 7702P – Benchmark results on up to 8 cores
x86-64 based architecture, 64 cores, 2.0 GHz – 3.35 GHz; 16 MiB L3 cache per 4-core cluster, shared within the cluster; gcc as backend
[Figure: speedup to sequential version (0 to 10) on 1, 2, 4 and 8 cores for BT, CG, SP, art, equake, MPEG2 and swim; CG macro-task graph.]
CG and swim show superlinear speedup. CG: seq.: 0.86 s, 8 core OSCAR: 0.09 s. L3 cache optimization by data localization. 39

NVIDIA Carmel ARMv8.2 – Benchmark results on up to 4 cores
Arm v8.2 based architecture, 6 cores, 1.4 GHz; 4 MiB L3 cache shared across all cores; gcc as backend
[Figure: speedup to sequential version (0 to 3) on 1, 2 and 4 cores for BT, CG, SP, art, equake, MPEG2 and swim.]
Overall good speedup is observed. equake: seq.: 19.0 s, 4 core OSCAR: 7.18 s. 40

SiFive Freedom U740 – Benchmark results on up to 4 cores
RISC-V based architecture, 4 cores, 1.2 GHz; 2 MiB L2 cache shared across all cores; gcc as backend
[Figure: speedup to sequential version (0 to 4.5) on 1, 2 and 4 cores for BT, CG, SP, art, equake, MPEG2 and swim.]
Overall good speedup is observed; swim is superlinear. BT: seq.: 2041 s, 4 core OSCAR: 551 s. 2021/10/14 41
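The execution times quoted on the four benchmark slides can be turned into speedup figures with a few lines. The sketch below uses only the timings stated on the slides; the helper names and the short platform labels are illustrative. A speedup is called superlinear here when it exceeds the number of cores used.

```python
def speedup(t_seq, t_par):
    """Speedup of a parallel run relative to the sequential run."""
    return t_seq / t_par


def is_superlinear(t_seq, t_par, cores):
    """True when the speedup exceeds the number of cores used."""
    return speedup(t_seq, t_par) > cores


# (benchmark and platform, sequential seconds, OSCAR seconds, cores), taken from the slides.
timings = [
    ("swim on Xeon E5-2650v4", 58.1, 10.5, 4),
    ("CG on EPYC 7702P", 0.86, 0.09, 8),
    ("equake on Carmel", 19.0, 7.18, 4),
    ("BT on Freedom U740", 2041, 551, 4),
]

for name, t_seq, t_par, cores in timings:
    tag = "superlinear" if is_superlinear(t_seq, t_par, cores) else "not superlinear"
    print(f"{name}: {speedup(t_seq, t_par):.2f}x on {cores} cores ({tag})")
```

With these numbers swim reaches roughly 5.5 times on 4 Xeon cores and CG roughly 9.6 times on 8 EPYC cores, which matches the slides' remark that both are superlinear; the 1-core OSCAR time for swim (33.2 s versus 58.1 s) already gives about 1.75 times before any parallelism is used.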

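Engine control computation for a Japanese passenger car achieved a 1.95 times speedup on a Denso 2-core ECU (Mikami, Umeda). Engine control computation for a European agricultural vehicle achieved an 8.7 times speedup on an Infineon 2-core processor. 42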

Macro Task Fusion for Static Task Scheduling
Fuse branches and succeeding tasks into a merged block, so that only data dependencies remain.
Legend: data dependency; control flow; conditional branch; merged block.
[Figure panels: MFG of sample program before macro task fusion; MFG of sample program after macro task fusion; MTG of sample program after macro task fusion.] 43
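The fusion step can be pictured as collapsing each conditional branch and the tasks reached only through it into one block, then re-expressing the data dependencies between blocks. The following is a simplified sketch under that reading; the input format, the function name and the sample graph are hypothetical, and a real compiler would need further legality checks.

```python
def fuse_branches(tasks, branch_targets, data_edges):
    """Fuse each conditional branch with the tasks that are reached only through it.

    tasks:          task names in program order
    branch_targets: {branch_task: set of tasks control dependent on it}
    data_edges:     set of (producer, consumer) data dependencies
    Returns (blocks, fused_edges): members of each merged block, and the
    data dependencies between merged blocks.
    """
    parent = {t: t for t in tasks}
    for branch, targets in branch_targets.items():
        for t in targets:
            parent[t] = branch

    def root(t):
        # Follow parent links so nested branches end up in the outermost block.
        while parent[t] != t:
            t = parent[t]
        return t

    blocks = {}
    for t in tasks:
        blocks.setdefault(root(t), []).append(t)
    fused_edges = {(root(a), root(b)) for a, b in data_edges if root(a) != root(b)}
    return blocks, fused_edges


# Hypothetical sample: MT2 is a conditional branch selecting MT3 or MT4.
tasks = ["MT1", "MT2", "MT3", "MT4", "MT5"]
branch_targets = {"MT2": {"MT3", "MT4"}}
data_edges = {("MT1", "MT2"), ("MT1", "MT3"), ("MT3", "MT5"), ("MT4", "MT5")}

blocks, fused_edges = fuse_branches(tasks, branch_targets, data_edges)
print(blocks)
print(sorted(fused_edges))
```

After fusion no control dependence crosses a block boundary, so the remaining graph can be scheduled statically at compile time, which matters for hard real-time control code.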

OSCAR Compile Flow for MATLAB/Simulink
Simulink model → generate C code using Embedded Coder → C code → OSCAR Compiler:
(1) Generate MTG → parallelism
(2) Generate Gantt chart → scheduling on a multicore
(3) Generate parallelized C code using the OSCAR API → multiplatform execution (Intel, ARM, SH, etc.) 44
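Steps (1) and (2) of this flow, going from a macro-task graph to a Gantt chart, can be illustrated with a generic list scheduler. The sketch below uses critical-path priority, a textbook heuristic chosen for illustration; it is not claimed to be the scheduler the OSCAR compiler uses, and the task costs and graph are made up.

```python
def list_schedule(cost, preds, cores):
    """Static list scheduling of a task graph with critical-path priority.

    cost:  {task: execution time}
    preds: {task: list of predecessor tasks}
    Returns a Gantt chart as a list of (core, task, start, end).
    """
    succs = {t: [] for t in cost}
    for t, ps in preds.items():
        for p in ps:
            succs[p].append(t)

    # Priority of a task: longest path from the task to the end of the graph.
    level = {}

    def longest_path(t):
        if t not in level:
            level[t] = cost[t] + max((longest_path(s) for s in succs[t]), default=0)
        return level[t]

    for t in cost:
        longest_path(t)

    core_free = [0] * cores
    finish, gantt = {}, []
    remaining = set(cost)
    while remaining:
        ready = [t for t in remaining if all(p in finish for p in preds.get(t, ()))]
        task = max(ready, key=lambda t: (level[t], t))
        data_ready = max((finish[p] for p in preds.get(task, ())), default=0)
        # Pick the core on which the task can start earliest.
        core = min(range(cores), key=lambda c: max(core_free[c], data_ready))
        start = max(core_free[core], data_ready)
        finish[task] = start + cost[task]
        core_free[core] = finish[task]
        gantt.append((core, task, start, finish[task]))
        remaining.remove(task)
    return gantt


# Hypothetical macro-task graph: MT1 feeds MT2 and MT3, which both feed MT4.
cost = {"MT1": 2, "MT2": 3, "MT3": 4, "MT4": 1}
preds = {"MT2": ["MT1"], "MT3": ["MT1"], "MT4": ["MT2", "MT3"]}

gantt = list_schedule(cost, preds, cores=2)
for core, task, start, end in gantt:
    print(f"core {core}: {task} [{start}, {end})")
```

Because the schedule is fixed before execution, each core's task order can be emitted directly as straight-line C code per core, which is what step (3) produces with OSCAR API directives.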

Speedups of MATLAB/Simulink Image Processing on Various 4core Multicores (Intel Xeon, ARM Cortex A15 and Renesas SH4A) Road Tracking, Image Compression : http://www.mathworks.co.jp/jp/help/vision/examples Buoy Detection : http://www.mathworks.co.jp/matlabcentral/fileexchange/44706-buoy-detection-using-simulink Color Edge Detection : http://www.mathworks.co.jp/matlabcentral/fileexchange/28114-fast-edges-of-a-color-image--actual-color--not-convertingto-grayscale-/ Vessel Detection : http://www.mathworks.co.jp/matlabcentral/fileexchange/24990-retinal-blood-vessel-extraction/ 45

46

OSCAR Heterogeneous Multicore
• DTU – Data Transfer Unit
• LPM – Local Program Memory
• LDM – Local Data Memory
• DSM – Distributed Shared Memory
• CSM – Centralized Shared Memory
• FVR – Frequency/Voltage Control Register 47

OSCAR API Ver. 2.0 for Homogeneous/Heterogeneous Multicores and Manycores (LCPC2009 Homogeneous, 2010 Heterogeneous) Specification: http://www.kasahara.cs.waseda.ac.jp/api/regist.php?lang=en&ver=2.1 48

An Image of Static Schedule for Heterogeneous Multicore with Data Transfer Overlapping and Power Control
[Figure: Gantt chart over time for CPU0 to CPU3 and DRP0, each with a CORE row and a DTU row. CORE rows run macro-tasks MT1-1 to MT1-4 (MTG1), MT2-1 to MT2-8 (MTG2) and MT3-1 to MT3-8 (MTG3); DTU rows run LOAD, SEND and STORE transfers; idle cores are marked OFF.] 49
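The benefit of letting a data transfer unit load data while the core computes can be estimated with a small timing model. The sketch below is an assumption-laden illustration: each task is reduced to a (load time, compute time) pair, the DTU issues loads back to back, and local memory is assumed large enough to hold preloaded data. The function names are hypothetical.

```python
def makespan_without_overlap(tasks):
    """Core performs each load itself, then computes: times simply add up."""
    return sum(load + compute for load, compute in tasks)


def makespan_with_overlap(tasks):
    """DTU preloads data for upcoming tasks while the core computes the current one."""
    dtu_free = 0   # time at which the DTU has finished all loads issued so far
    core_free = 0  # time at which the core has finished all computation so far
    for load, compute in tasks:
        dtu_free += load                  # loads run back to back on the DTU
        start = max(core_free, dtu_free)  # compute needs both the core and the data
        core_free = start + compute
    return core_free


# Hypothetical macro-tasks: (load time, compute time) in arbitrary units.
tasks = [(2, 5), (2, 5), (2, 5)]

print(makespan_without_overlap(tasks))
print(makespan_with_overlap(tasks))
```

In this example only the first load remains exposed; every later load is hidden behind the previous task's computation, which is the effect the LOAD and SEND entries on the DTU rows of the schedule are meant to achieve.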

Automatic Local Memory Management
Data Localization: Loop Aligned Decomposition
• Decompose loops into LRs and CARs
– LR (Localizable Region): data can be passed through LDM
– CAR (Commonly Accessed Region): data transfers are required among processors
[Figure panels: multi-dimension decomposition; single dimension decomposition.] 50
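The split into localizable and commonly accessed regions can be illustrated on a one-dimensional stencil. The sketch below is a simplification made for illustration, not the compiler's decomposition algorithm: a producer loop writes `a[i]`, a consumer loop reads `a[i-halo]` to `a[i+halo]`, both loops are cut into the same contiguous blocks, and a consumer iteration is labelled LR when everything it reads was produced in its own block and CAR otherwise. All names are hypothetical.

```python
def aligned_blocks(n, parts):
    """Split iterations 0..n-1 into `parts` contiguous blocks of near-equal size."""
    base, extra = divmod(n, parts)
    blocks, lo = [], 0
    for p in range(parts):
        hi = lo + base + (1 if p < extra else 0)
        blocks.append(range(lo, hi))
        lo = hi
    return blocks


def classify_consumer_iterations(n, parts, halo):
    """Label consumer iterations that read a[i-halo .. i+halo] as LR or CAR per block."""
    result = []
    for block in aligned_blocks(n, parts):
        lr = [
            i for i in block
            if max(i - halo, 0) >= block.start and min(i + halo, n - 1) < block.stop
        ]
        car = [i for i in block if i not in lr]
        result.append({"LR": lr, "CAR": car})
    return result


# Eight iterations, two processors, three-point stencil (halo of 1).
regions = classify_consumer_iterations(n=8, parts=2, halo=1)
for proc, region in enumerate(regions):
    print(proc, region)
```

Iterations in an LR can keep their data in the local data memory of the processor that produced it, while the few CAR iterations at block boundaries are the only ones that need transfers between processors.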

Speedups by OSCAR Automatic Local Memory Management Compared to Executions Utilizing Centralized Shared Memory, for Embedded and Scientific Applications on the RP2 8-core Multicore
Maximum of 20.44 times speedup on 8 cores using local memory against sequential execution using off-chip shared memory 51

AIREC (AI-driven Robot for Embrace and Care), led by Prof. Sugano
Supported by the Japanese Government "Moonshot" Project from 2020
An AI robot that stays close to people throughout their lives: childcare, housework, family business support, nursing, remote diagnosis, elderly care
A universal smart robot that cares for a family's whole lifetime, from babysitting to elderly care, as a family member.
[Figure labels: caring for sick persons; helping with house business; helping with remote diagnosis; caring for elderly persons; caring for children; housekeeping.] 52

Prof. Keiji Kimura is developing a new custom chip. 53

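Solar-Powered Personal Supercomputer: New Accelerator Green Multicore (AI, big data, autonomous vehicles, traffic control, cancer treatment, earthquakes, robots)
Cooperation with the world's highest-performance OSCAR compiler with power-reduction functions
Shared-memory multicore system with vector accelerators attached: performance 8 TFLOPS, main memory 8 TB, power 40 W, efficiency 200 GFLOPS/W; 64 cores × 4 chips
[Figure: main memory; inter-chip crossbar / variable barrier / multicast; multicore chips with on-chip shared memory (3D stacked) and on-chip crossbar / variable barrier / multicast; each core has local memory and distributed shared memory (3D stacked, partly non-volatile), the developed vector accelerator*, a DTU (data transfer unit), a processor (IBM, ARM, Intel, Renesas) and a power control unit.]
* The accelerator patents are certified under the JST patent group support program.
• Vector accelerator that can be attached to any processor without instruction set extensions
• Low-power, fast-start-up vector unit with low-cost design
• Automatic vectorization, parallelization and power reduction by the compiler
• Frequency and supply voltage control functions
• Waste eliminated by fast barrier synchronization and local/distributed memory
• Low memory cost through use of local memory
• Usable by anyone without tuning, enabling low-cost, short-term software development 54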
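The 200 GFLOPS/W target can be put next to the supercomputers mentioned earlier in the talk with a quick calculation. The sketch below uses only performance and power figures quoted on the slides; the function name is illustrative, and the comparison is rough because it mixes measured Linpack results (Rmax) with a design target.

```python
def gflops_per_watt(flops_per_second, watts):
    """Power efficiency in GFLOPS per watt."""
    return flops_per_second / watts / 1e9


# (performance in FLOPS, power in watts), as quoted on the slides.
systems = {
    "Earth Simulator (Rmax)": (35.86e12, 3.2e6),
    "Fugaku (Rmax)": (415.53e15, 28.3e6),
    "Frontier (Rmax)": (1.19e18, 22.7e6),
    "Green multicore (target)": (8e12, 40.0),
}

for name, (flops, watts) in systems.items():
    print(f"{name}: {gflops_per_watt(flops, watts):.3g} GFLOPS/W")
```

The quoted figures work out to roughly 0.011 GFLOPS/W for the Earth Simulator, about 15 for Fugaku and about 52 for Frontier, against the 200 GFLOPS/W stated for the proposed system.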

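Green Computing: Environment-Friendly Low-Power, High-Performance Computing
Waseda University Green Computing Center: Hironori Kasahara, Keiji Kimura
OSCAR multicore/server and compiler; OSCAR many-core, accelerator, API, software: speedup and power reduction for HPC, AI and big data
Contribution to the environment: carbon neutrality. Data centers: 100 MW (requires a thermal power plant), 1000 MW = 1 GW (requires a nuclear power plant). Green supercomputer; green data/cloud servers.
Contribution to life and the SDGs; contribution to people around the world: safe, secure and convenient products and services (industry-government-academia collaboration, ventures)
Application areas (industry, daily life, medical, disaster):
• Automotive (engine control, deep learning for automated driving, ADAS, automatic parallelization of MATLAB/Simulink): Denso, Renesas, NEC
• Traffic simulation and signal control: NTT Data, Hitachi
• Capsule endoscope: Olympus
• Heavy-particle cancer therapy: Hitachi
• Shinkansen car-body design and deep learning: Hitachi
• FA: Mitsubishi
• Fire spread and resident evacuation instructions for an earthquake directly beneath the Tokyo metropolitan area
• Smartphones, cameras, personal supercomputer: solar-powered, with high-reliability, low-cost software development 55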